If a plane is in trouble, a computer won’t panic.

So is it time for humans to leave the cockpit?

That question is provocative enough in the abstract. But it grows increasingly difficult to answer in light of two horrific air disasters of 2014. The March disappearance of Malaysia Airlines Flight 370 and all 239 persons on board continues to confound investigators nearly one year later. The more recent crash of AirAsia QZ8501 on Dec. 28 is equally troubling, despite success in locating the crash site and the plane’s black boxes.

Little is yet known about the interaction between the human pilots and automated systems aboard those flights, and how those interactions contributed to each disaster.

So what is known? With pilots at the controls, we actually are enjoying a period of unprecedented safety in the skies. In the U.S., statistics from 2008-12 put the odds of dying on any flight at one in 45 million. And while much of that safety is due to advances in automated, computer-driven systems, those same advances often bring unforeseen challenges for the humans charged with managing them.

The Michigan Engineer recently looked at some of those issues.

Ready for takeoff?

Somewhere over northern New Mexico, a pilot and co-pilot felt a shudder as something struck the airplane. Immediately, the plane’s systems set to work testing out its various control surfaces, such as the flaps and rudder, to discover its new limitations. Then it mapped updated instructions to the pilots’ hand controls that would allow them to maneuver in spite of the damage.

Whatever it was had taken out one side of the tail. With damage to the horizontal stabilizer and elevator, it would be harder to control the altitude and descent rate. This meant that the pilots would have to land at a much higher speed than normal, and they needed to start preparing for that landing now.

The pilot called up the Emergency Landing Planner on the flight computer. It quickly assessed the nearby runways, the possible flight paths, the weather, the risk that the plane might pose to people on the ground, and how quickly help could arrive.

Within a few seconds, it had ranked many promising routes, with Clovis Municipal Airport in New Mexico at the top. Cannon Air Force Base, sporting a much longer runway, drew the pilots’ eyes. But the weather was too poor there – the wind was blowing across the runway, which spelled trouble for a plane with a damaged tail.

Clovis offered a headwind, allowing the plane to travel slower with respect to the ground as it came in to land. The pilots hoped it would be enough as they turned the plane toward the smaller airport.
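The planner's internals aren't described here, so what follows is only a minimal sketch of the kind of ranking the scene describes. The runways, weights, and limits are hypothetical and are not taken from NASA's software. Each candidate's wind is split into headwind and crosswind components, runways whose crosswind exceeds what the damaged aircraft can handle (or that are too short) are ruled out, and the rest are ordered by a weighted cost.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    runway_heading_deg: float  # direction the aircraft points when landing
    length_ft: float
    distance_nm: float         # how far the aircraft must fly to get there
    ground_risk: float         # 0 = empty land under the approach, 1 = dense population
    wind_from_deg: float       # direction the wind blows FROM
    wind_kt: float


def wind_components(runway_heading_deg, wind_from_deg, wind_kt):
    """Return (headwind, crosswind) in knots; headwind > 0 means wind on the nose."""
    angle = math.radians(wind_from_deg - runway_heading_deg)
    return wind_kt * math.cos(angle), abs(wind_kt * math.sin(angle))


def cost(c, max_crosswind_kt, min_length_ft):
    headwind, crosswind = wind_components(c.runway_heading_deg, c.wind_from_deg, c.wind_kt)
    if crosswind > max_crosswind_kt or c.length_ft < min_length_ft:
        return math.inf  # infeasible for this damaged aircraft
    # Hypothetical weights: shorter diversions, less ground risk, and more headwind
    # (lower groundspeed at touchdown) all make a candidate more attractive.
    return c.distance_nm + 100 * c.ground_risk - 2 * headwind


def rank(candidates, max_crosswind_kt, min_length_ft):
    scored = sorted((cost(c, max_crosswind_kt, min_length_ft), c.name) for c in candidates)
    return [name for value, name in scored if value < math.inf]


candidates = [
    Candidate("Long runway, crosswind", 40, 10_000, 30, 0.1, 130, 20),
    Candidate("Shorter runway, headwind", 220, 6_000, 25, 0.2, 220, 15),
    Candidate("Distant runway, calm", 90, 8_000, 80, 0.05, 0, 0),
]
print(rank(candidates, max_crosswind_kt=10, min_length_ft=5_000))
# ['Shorter runway, headwind', 'Distant runway, calm']
```

The long runway drops out despite its length because its 20-knot direct crosswind exceeds the assumed 10-knot limit, much as Cannon lost out in the scenario.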

With this choice, odds are good that these pilots would land the plane in relative safety, but in truth they were safe the whole time. This is one of the scenarios that five teams of professional airline pilots faced as they flew in a simulator at the NASA Ames Research Center at Moffett Field, California.

A group of researchers in the Intelligent Systems Division was testing its Emergency Landing Planner software – a type of program first developed by a researcher now at U-M. Although NASA is best known for space exploration, the National Aeronautics and Space Administration has always played a strong role in atmospheric flight innovations.

The team, led by David Smith, hopes the planner will help pilots find the best landing site and route to take, potentially saving lives. The test was encouraging, with the pilots saying such a tool would be welcome in their cockpits, Smith reports.

“It’s allowing people to make faster decisions and take more information into account,” he says. “If you can make the right decision quickly, it helps a lot.”

What’s the plan?

In its current iteration, the planner only acts as a guide, a bit like a GPS route planner in a car. It doesn’t choose the route or fly the plane. Still, it demonstrates that a computer can assess a complex and unexpected situation, a skill that previously set human operators apart from machines.

Those who defend our reliance on pilots often point to unexpected emergency scenarios as the reason why we need humans in the cockpit. Sure, a drone can handle routine flight, but can it come up with a way to save the day when it encounters a problem that isn’t in the plan?

This kind of software challenges that view. Expanded to the point where it could choose the route and load it into the autopilot system, such an emergency lander would represent a computer capable of handling a midair crisis – a machine to call on in a “mayday” situation. And then, would we still need pilots?

View from the cockpit

Right now, with pilots at the controls, we are enjoying a period of unprecedented safety. For example, statistics in the U.S. show if you flew on three commercial flights every day, you could expect to experience one fatal crash in 40,000 years.
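The 40,000-year figure follows directly from the one-in-45-million odds for 2008-12 cited at the top of this article, if those odds are read as the chance that any single flight ends in a fatal crash. A quick check:

```python
# Odds of dying on a single U.S. flight, per the 2008-12 statistics.
odds_per_flight = 1 / 45_000_000

flights_per_year = 3 * 365  # three commercial flights every day
expected_fatal_crashes_per_year = odds_per_flight * flights_per_year
years_per_fatal_crash = 1 / expected_fatal_crashes_per_year

print(f"{years_per_fatal_crash:,.0f} years")  # roughly 41,000 years
```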

And yes, modern pilots deserve some credit. Patrick Smith, first officer for a commercial airline as well as an author and columnist on aviation, says the autopilot is overrated.

“Millions of people out there think that planes are programmed to fly themselves and pilots are sitting back,” he says. “It’s one of the most misunderstood and exaggerated aspects of commercial aviation.”

State-of-the-art automation can handle all physical parts of routine flight, but pilots tell the plane what to do and handle any changes to the plan that may arise from weather, traffic at the airport, or other circumstances.

Currently, pilots receive flight plans from a dispatcher for the airline, which the pilots review before the plane takes off. At the gate, the pilots fire up the plane’s electronics and automated systems – among these, the flight management system. They plug in an outline of the flight, including points that the plane will pass by on its route, the sequence of climbs that will take it to cruising altitude, the descent at the destination airport, and winds and weather along the way.

Then, the pilots fly the plane through takeoff until they hand off control to the flight management system. Patrick Smith compares it to cruise control on a car.

“Cruise control frees the driver from certain tasks at certain times, but it can’t drive your car from L.A. to New York. Automation can’t fly a plane from L.A. to New York either,” he says.

Even with the autopilot on, both pilots often become completely occupied with tasks such as updating the route to avoid a storm or making changes ordered by air traffic control.

While planes are capable of auto-landing, Patrick Smith says it is rarely used. “More than 99 percent of landings are performed by hand,” he says. Unless the pilot can’t see the runway, it’s easier to fly a successful landing than program one in.

An imperfect balance

The current safety record in aviation represents a substantial change from the 1970s, when more than 30 passenger flights on U.S. carriers ended in fatal accidents. In the decade from 2004-13, that number was just four.

Much of the credit for this improvement goes to computer-driven systems on airplanes, known collectively as flight deck automation, that handle aspects of flight for the pilots. These systems allow pilots to perform higher-level planning tasks, such as anticipating challenges like bad weather. Sensors and software can alert pilots to issues such as a potential stall or mechanical problem. In some cases, the automation even handles the problem for the pilot.

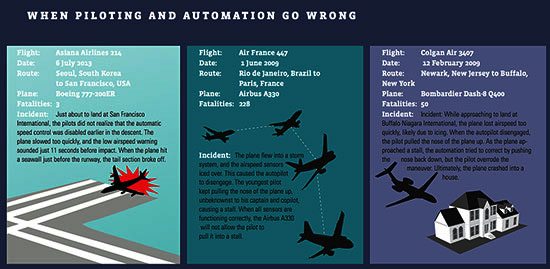

However, automation has also introduced new challenges that played a role in a number of mishaps, including the Colgan Air and Air France crashes of 2009 and the ill-fated Asiana Airlines landing of 2013 (see graphic). In all these cases, due to a combination of poor feedback, insufficient training, and unusual conditions, the pilots lost awareness of the plane’s status, with fatal results.

“The flight crews failed to either notice or understand what the systems were doing and why they were acting the way they did,” says Nadine Sarter, a professor of industrial and operations engineering at U-M.

In most cases, pilot error is blamed for such accidents. But Sarter argues that plane crashes almost always result from a combination of factors involving the systems as well as the pilots. Also, she suggests pilots get short shrift when it comes to accident reporting.

“Lo and behold, 75 percent of all aviation accidents are attributed to human error,” she says. “What we need to look at as well, however, is the figure that shows, for every year, the number of incidents that did not turn into an accident because a human got involved. That is the fair comparison.”

Firm numbers aren’t available, but the Aviation Safety Reporting System, an incident reporting system run by NASA, offers some insights. Anyone involved in aviation operations is encouraged to report safety-related incidents. The program is confidential and anonymous, and the reports can be used to identify and study safety concerns. On average, the database receives more than 6,700 incident reports per month, including submissions from private pilots.

Some reports describe straightforward human errors, such as setting the wrong altitude, but others reveal human pilots reacting to automation that is behaving in an unexpected way – for instance, the autothrust system isn’t maintaining the right speed or instruments go down. The pilots may change the plan, for example postponing the landing until they can either get the equipment working or make do without it. These incidents typically end with an undramatic landing because the pilots took the right corrective action.

Many pilots have seen instruments giving conflicting information, requiring judgment calls, and GPS or on-board computers failing. They remain skeptical of staking passengers’ lives on the performance of automation. Many passengers, who see their own computer monitors freeze in grayscale or throw up the “blue screen of death,” are equally wary.

Different solutions

Sarter maintains the problem is not the humans or the automation alone but rather the way humans and automation interact. “I used to call it John Wayne automation – it’s strong and silent,” she says. “It’s not a team player. We haven’t turned it into something that truly collaborates with the pilot.”

In particular, she is interested in determining how much power and independence to give the automation. The best balance would provide gains in efficiency and precision while also keeping pilots in the loop so they can quickly and effectively take over from the automation when necessary.

This transition of control is where current automation often creates problems. “In some cases, it does not provide proper feedback to help pilots realize they need to get involved,” says Sarter.

In the case of Asiana Airlines, for instance, the pilot at the controls didn’t realize he had disabled the autothrust system, setting the engines to idle. He and the monitoring pilot knew they were descending too quickly but couldn’t determine why.

The warning that the plane was moving too slowly sounded 11 seconds before impact, when it was already too late. The pilots could not gain enough speed to avoid crashing into the seawall. While this doesn’t excuse the pilots for failing to notice the thrust mode, it does reveal a path toward safer automation that gives clearer indications of system modes and sounds warnings earlier.
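One way to sound a warning earlier is to alert on where the airspeed is heading rather than only on where it is. The sketch below uses hypothetical thresholds and has no relation to any certified alerting logic. It fits a least-squares trend to recent airspeed samples and warns if that trend would cross a minimum speed within a look-ahead window.

```python
def airspeed_trend(samples):
    """Least-squares slope, in knots per second, of (time_s, airspeed_kt) samples.

    Needs at least two samples taken at different times.
    """
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    return num / den


def predictive_low_speed_alert(samples, min_speed_kt, lookahead_s=30.0):
    """True if airspeed is already at or below the minimum, or trending there within lookahead_s."""
    current = samples[-1][1]
    if current <= min_speed_kt:
        return True
    slope = airspeed_trend(samples)
    if slope >= 0:
        return False  # steady or accelerating: nothing to predict
    seconds_to_minimum = (current - min_speed_kt) / -slope
    return seconds_to_minimum <= lookahead_s


# Hypothetical approach: airspeed bleeding off at 1 kt/s, still well above a 120 kt minimum.
samples = [(0, 150), (1, 149), (2, 148), (3, 147), (4, 146)]
print(predictive_low_speed_alert(samples, min_speed_kt=120))  # True: minimum reached in about 26 s
```

A plain threshold alert would stay silent here, because the current speed of 146 knots is still above the minimum.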

Communication between the automation and the pilots is another of Sarter’s interests. Because pilots are increasingly overloaded with visual and auditory information, she develops new ways to communicate through the sense of touch, using patterns of vibrations on the skin.

“Such ‘vibrotactile’ feedback is well suited for presenting spatial information, such as the location and movement of surrounding aircraft, and early notifications of problems that do not yet require highly disruptive auditory alerts,” she says.
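As a hypothetical illustration of how spatial information could be encoded for the skin, the bearing of nearby traffic can select which vibration motor (tactor) fires and its distance can set how quickly it pulses. The ring of eight tactors and the timing values are assumptions, not a description of Sarter's designs.

```python
def tactor_for_bearing(relative_bearing_deg, n_tactors=8):
    """Index of the tactor nearest a bearing; 0 = straight ahead, counting clockwise."""
    sector = 360.0 / n_tactors
    return int(((relative_bearing_deg % 360) + sector / 2) // sector) % n_tactors


def pulse_interval_s(distance_nm, max_range_nm=10.0, fastest_s=0.2, slowest_s=1.0):
    """Closer traffic pulses faster; anything beyond max_range_nm pulses at the slowest rate."""
    fraction = min(max(distance_nm / max_range_nm, 0.0), 1.0)
    return fastest_s + (slowest_s - fastest_s) * fraction


# Traffic 3 nm away, off the right side of the aircraft.
print(tactor_for_bearing(80), pulse_interval_s(3.0))
```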

Talk to me

Poor communication between automation and pilots also contributed to the crash of Colgan Air Flight 3407. The automation did not give visual or auditory warnings that the plane was approaching a dangerously slow speed. Instead, the autopilot abruptly handed control back to the pilots with a stall warning. Startled, the pilot took the wrong action, raising the nose of the plane, which ensured it would stall.

Ella Atkins, a U-M associate professor of aerospace engineering who develops autonomous flight systems, including early emergency landing planners, points out that the pilot flying Colgan 3407 was exhausted and had failed tests. These factors spurred new regulations, but her trust in the competence of pilots remains limited. “I want that automation up front that slaps them on the wrist if they try to kill me,” she says.

Atkins is working on a new system that would monitor both the pilot and the autopilot for actions that could create a dangerous situation. This system could then intervene.

“It is a watchdog that only takes short-term action to mitigate risk, offering a quick ‘No, you can’t do that,’ then restoring full control to the crew,” says Atkins.
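Atkins's system isn't specified in detail here, so the following is only a minimal sketch of the watchdog idea as she describes it, assuming a single hypothetical angle-of-attack limit. Commands from either the pilot or the autopilot pass through untouched unless they would exceed the limit. In that case only the offending command is clipped, and full authority returns to whoever issued it on the next step.

```python
from typing import NamedTuple

AOA_LIMIT_DEG = 12.0  # hypothetical angle-of-attack limit, not a real aircraft's value


class Decision(NamedTuple):
    source: str            # "pilot" or "autopilot": whoever issued the command
    applied_aoa_deg: float  # what is actually sent to the flight controls
    vetoed: bool           # True only on steps where the watchdog intervened


def watchdog(source: str, commanded_aoa_deg: float, limit_deg: float = AOA_LIMIT_DEG) -> Decision:
    if commanded_aoa_deg > limit_deg:
        # Short-term action, "No, you can't do that": clip this one command.
        return Decision(source, limit_deg, True)
    # Otherwise the crew (or autopilot) keeps full authority.
    return Decision(source, commanded_aoa_deg, False)


# A startled pilot hauls back on the controls for one step, then relaxes.
for aoa in (5.0, 18.0, 8.0):
    print(watchdog("pilot", aoa))
```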

Already, technologies like this are finding their way onto airplanes. Air France Flight 447, the Airbus A330 that crashed into the Atlantic, was equipped with anti-stall technology that would have leveled the plane off if all the sensors had been working – regardless of pilot input.

Unfortunately, after reverting to manual piloting when the airspeed sensor froze over, the plane could only sound the stall alarm. Neither the more experienced co-pilot nor the captain realized until the final seconds that their younger partner had been holding the plane in a stall.

Not all losses of airspeed data end this way. Also in 2009, two Airbus A330 planes experienced the same failure, but their pilots followed the appropriate procedures for keeping the plane under control until the airspeed sensors unfroze a minute or two later. It didn’t make the news when these flights landed safely at their destinations.

While the Colgan and Air France pilots failed to save the situation, in both cases it was the automated systems that failed first. Many researchers are looking for ways to make the automation more robust.

For instance, a technique called sensor fusion could have allowed the Airbus A330 computer to compensate for the missing sensor data through estimates based on other readings. Then, the flight management system could set more conservative limits for maneuvering just as the successful pilots did.
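Here is a minimal sketch of that idea under heavy simplifying assumptions. Airspeed is treated as a single number, the frozen sensor is assumed to have already been flagged as invalid, and the backup estimate is GPS groundspeed plus the last known headwind. The available estimates are combined by inverse-variance weighting, one basic form of sensor fusion, and the maneuvering limit tightens when the fused estimate is uncertain. All variances and limits are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted mean of (value, variance) pairs -> (value, variance)."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


def airspeed_estimate(pitot_kt, pitot_valid, groundspeed_kt, last_headwind_kt):
    # Hypothetical variances in kt^2: a healthy pitot probe is precise, while the
    # GPS-plus-wind estimate is coarse because the wind may have changed.
    estimates = [(groundspeed_kt + last_headwind_kt, 100.0)]
    if pitot_valid:
        estimates.append((pitot_kt, 4.0))
    return fuse(estimates)


def max_bank_deg(variance_kt2, normal_deg=30.0, conservative_deg=15.0, threshold_kt2=25.0):
    """Fly more gently when the airspeed estimate is uncertain."""
    return normal_deg if variance_kt2 <= threshold_kt2 else conservative_deg


healthy = airspeed_estimate(250, True, 230, 20)
iced = airspeed_estimate(0, False, 230, 20)  # pitot reading ignored once flagged invalid
print(healthy, max_bank_deg(healthy[1]))
print(iced, max_bank_deg(iced[1]))
```

The aircraft keeps a usable airspeed estimate after the probe fails, but the larger uncertainty automatically buys a more conservative bank limit, roughly what the successful A330 crews did by procedure.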

Self-flying planes

As a developer of fully autonomous drones, Atkins believes the day computers can fly more safely than human pilots isn’t far away. In contrast to Patrick Smith, she says automation is already capable of flying from L.A. to New York, provided it doesn’t encounter failures, air traffic control instructions, or other disruptions that current technology relies on humans to handle.

Innovations that could give the automation more flexibility are backlogged as the Federal Aviation Administration (FAA), which proposes and enforces aviation regulations, decides how to certify software that doesn’t always do exactly the same thing.

One such technology is the adaptive controller – the software that figured out the new rules for flying the damaged virtual airplane in the NASA experiment. David Smith says the pilots found it to be particularly helpful. The FAA, however, is not a fan.

“Adaptive control, because of its maturity, has gotten to the point where that clash with the FAA has happened,” says Atkins.

The controller relies on sensors to tell it how the plane responded to each tiny maneuver, and those sensors can give different readings in the same situation. This means the controller can make mistakes if it gets enough bad sensor data, she explains. For that reason, the FAA deems it unreliable and won’t certify it.
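To make that concrete, here is a minimal sketch of one common adaptive-control ingredient, recursive least squares (RLS) parameter estimation. It is not NASA's controller. It rests on a drastically simplified, hypothetical model in which pitch rate responds linearly to elevator deflection. The estimator learns how effective the elevator is from each small probing maneuver and inverts that estimate to choose commands, which is also why a run of bad sensor readings can steer it wrong.

```python
class ControlEffectivenessEstimator:
    """Scalar recursive least squares (RLS) for the hypothetical model y = b * u.

    u: elevator deflection for a small probing maneuver (deg)
    y: measured pitch-rate response (deg/s)
    b: control effectiveness, which damage to the tail can change
    """

    def __init__(self, b_initial, p_initial=100.0, forgetting=0.98):
        self.b = b_initial            # current estimate of b
        self.p = p_initial            # estimate uncertainty (larger = adapt faster)
        self.forgetting = forgetting  # < 1 discounts old data so b can track damage

    def update(self, u, y):
        gain = self.p * u / (self.forgetting + u * self.p * u)
        self.b += gain * (y - self.b * u)
        self.p = (self.p - gain * u * self.p) / self.forgetting
        return self.b

    def deflection_for(self, desired_rate):
        """Invert the learned model to get the deflection for a desired pitch rate."""
        return desired_rate / self.b


est = ControlEffectivenessEstimator(b_initial=2.0)  # effectiveness before the damage
for _ in range(20):
    est.update(u=1.0, y=1.0)  # damaged tail: each degree now yields only 1 deg/s
print(round(est.b, 2), round(est.deflection_for(2.0), 2))  # about 1.0 and 2.0

for _ in range(5):
    est.update(u=1.0, y=3.0)  # faulty sensor exaggerates the response
print(round(est.b, 2))  # estimate drifts well above the true value of 1.0
```

After the bad readings, the estimator believes the elevator is more effective than it really is and would under-command the maneuver. That is the kind of behavior behind the certification concern described above.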

This annoys Atkins because programs that offer the flexibility to adapt to different situations are inherently unreliable by this definition. Instead, she wants to see these systems measured by whether they are as safe as humans.

Even for the Emergency Landing Planner, which does not have this perceived reliability problem, David Smith estimates it could take 20 years just to make it into the cockpit systems of private planes, though it could be available as a tablet application much sooner. Commercial aircraft would take even longer. But he is optimistic that in a decade or so, airline operations centers might use it to provide guidance to pilots in distress.

Still, companies like Amazon continue to push the boundaries of autonomous flight. At a recent conference, Atkins heard representatives of the delivery drone initiative report that they were collaborating with NASA on how to get drone technology certified for civilian use.

Regulatory paralysis

They face an uphill battle. At present, commercial drones are banned because the FAA claims control of the airspace all the way to the ground. Atkins believes that eventually, public and private property owners will be allowed to make decisions about their own airspaces.

The other challenge is that the FAA is presently defining unmanned aerial vehicles as “remotely piloted” – meaning there has to be a person on the ground in control of the aircraft. In spite of their differences, Atkins, Sarter, and Patrick Smith all agree that remote piloting is particularly dangerous.

The pilots on the ground have all the disadvantages of data link delays and slower reaction time without the benefit of being able to use their senses to gain additional information. Atkins would rather leave the decisions to the on-board computer.

Then there’s the catastrophe that could follow a loss of data link. At the very least, David Smith notes, these aircraft would need an autonomous emergency lander that places the safety of people and property on the ground as its highest priority.
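Below is a minimal sketch, with hypothetical names and numbers, of the two pieces this implies. A heartbeat timeout hands control to the on-board system when the data link goes quiet, and a landing-site choice ranks ground safety first. Sorting on a (people at risk, distance) tuple makes that priority strict, so a closer site never beats a safer one.

```python
from dataclasses import dataclass
from typing import Optional

LINK_TIMEOUT_S = 5.0  # hypothetical: how long silence is tolerated before acting


@dataclass
class LandingSite:
    name: str
    people_at_risk: float  # hypothetical estimate of people under the final approach
    distance_km: float
    reachable: bool        # e.g., within gliding or remaining-fuel range


def control_mode(now_s: float, last_heartbeat_s: float, timeout_s: float = LINK_TIMEOUT_S) -> str:
    """Stay under remote control while heartbeats arrive; otherwise land autonomously."""
    return "REMOTE" if now_s - last_heartbeat_s <= timeout_s else "AUTONOMOUS_LANDING"


def choose_site(sites: list[LandingSite]) -> Optional[str]:
    """Strict priority: fewest people at risk first, then the shortest diversion."""
    options = [s for s in sites if s.reachable]
    if not options:
        return None
    return min(options, key=lambda s: (s.people_at_risk, s.distance_km)).name


sites = [
    LandingSite("Open farmland", 0.0, 12.0, True),
    LandingSite("Airstrip beside a town", 40.0, 3.0, True),
    LandingSite("Remote runway", 0.0, 60.0, False),
]
if control_mode(now_s=20.0, last_heartbeat_s=8.0) == "AUTONOMOUS_LANDING":
    print(choose_site(sites))  # Open farmland
```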

Once we are used to little drones in the sky, Jim Bagian, a professor of industrial and operations engineering at U-M and former astronaut, suggests we would be more likely to accept cargo drones flying into airports, carrying packages between cities, well before fully automated passenger flights.

In 2009, DIY Drones creator Chris Anderson claimed FedEx founder Fred Smith said he wanted autonomous aircraft for this purpose, but FedEx declined comment on whether the company was actively pursuing such automation.

Only after the technology has been accepted by the FAA and proven in cargo planes will the FAA, the public, and airlines be likely to even begin considering phasing out pilots on passenger flights. By then, it may be a matter of activating autonomous function in existing airplanes, since large cargo planes are often special versions of the leading passenger models.

A question of motive

But why the interest in getting rid of pilots? Bagian points out that top salaries are a major expense, since senior first officers for major airlines can earn more than $200,000 per year and some captains claim salaries topping $400,000.

“Airlines might be saying, ‘Our biggest avoidable cost next to fuel is the pilots,’” Bagian says.

On routes flown by these top earners, a fully automated plane could save the airline over $2 million a year. That’s not even counting higher revenue from the extra passenger space with an exceptional view.
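The article doesn't show how the $2 million figure is reached. One way to reproduce its order of magnitude uses the salaries quoted above, counts salary only, and assumes a number of crews per aircraft. The four captain/first-officer pairs below are an illustrative guess, not a figure from the article.

```python
CAPTAIN_SALARY = 400_000        # "some captains claim salaries topping $400,000"
FIRST_OFFICER_SALARY = 200_000  # "senior first officers ... can earn more than $200,000"
CREW_PAIRS_PER_AIRCRAFT = 4     # assumption: crews needed to keep one plane flying year-round

cost_per_pair = CAPTAIN_SALARY + FIRST_OFFICER_SALARY
annual_pilot_salaries = CREW_PAIRS_PER_AIRCRAFT * cost_per_pair
pairs_to_reach_2m = 2_000_000 / cost_per_pair

print(f"${annual_pilot_salaries:,} per aircraft per year")         # $2,400,000 per aircraft per year
print(f"$2 million is reached at {pairs_to_reach_2m:.1f} crew pairs")  # 3.3 crew pairs
```

Benefits, training, and pensions would push the figure higher. Fewer crews per aircraft would push it lower.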

Bagian sees it as a political problem for the airlines rather than a genuine safety risk. “They want the FAA or somebody to say, ‘We think this is safe,’” he says. “So now it’s not that they’re a money-grubbing, mercenary, no-good commercial enterprise … It’s been to the Congress and the FAA. Everybody approved it, and they’re just doing what’s approved.”

Atkins notes that, because of the way the public tends to react, a cargo drone crashing into a populated area could put a company like FedEx out of business. We, and the juries we form, find it easier to forgive when we believe a human did everything he or she could to save the situation. We’re even more forgiving when that human is among the dead.

Still, we stand to gain more than just cheaper fares. Atkins says busy airports could be even busier with a machine at the controls.

“Separation and density of traffic on approach and from departure at major airports is really constrained by human reaction times,” she says. Human pilots need enough time to see and coordinate with other aircraft. With the precision offered by sensors and automated flight, more runways could fit into the airport’s grounds, and planes could arrive and depart at shorter intervals. “This has the potential to reduce delays and increase fuel efficiency and time efficiency throughout the entire air transportation system,” says Atkins.
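To see why tighter spacing matters, here is a back-of-the-envelope model of a single runway's arrival rate. The approach speed, spacings, and runway-occupancy time are hypothetical round numbers, not FAA separation standards. The point is that the interval between landings is set by whichever is longer: the in-trail spacing, or the time each plane needs to clear the runway.

```python
def arrivals_per_hour(spacing_nm, approach_speed_kt, runway_occupancy_s):
    """Steady-stream landings per hour on one runway."""
    spacing_s = spacing_nm / approach_speed_kt * 3600  # time between aircraft in trail
    interval_s = max(spacing_s, runway_occupancy_s)    # can't land until the runway is clear
    return 3600 / interval_s


for spacing in (3.0, 2.0, 1.5):
    print(spacing, round(arrivals_per_hour(spacing, 140, 50), 1))
```

Under these assumptions, cutting spacing from 3 to 2 nautical miles raises the rate from about 47 to 70 landings an hour. Below that, the runway itself becomes the bottleneck, which is why more runways matter as much as tighter spacing.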

An uncertain future

Bagian projects it is unlikely we could see the first autonomous passenger flights before 2035. Atkins estimates the first cargo drone to fly in and out of airports could be in service by 2030. But a lot would have to happen first.

Right now, with even small, autonomous, commercial drones forbidden from flying outdoors over uninhabited land, we as a society are missing out on opportunities to gain from autonomous technology and discover its limitations. Atkins particularly likes agricultural uses, such as crop dusting, pest detection, and moisture monitoring.

The latter applications could reduce pesticide and water use, while the former would make it unnecessary for humans to risk their lives doing the stunt-like maneuvers needed to fly a crop duster efficiently. And, when a crash occurred, the drone would likely go down in a field where it wouldn’t harm anyone.

Researchers like Atkins could use data from these drones to find out how often they crash, as well as how to prevent those failures. When it comes to computer reliability, Atkins points out that existing planes rely on computers for critical functions that keep them in the sky. To ensure a computer crash won’t lead to a plane crash, these aircraft typically have three or more computers handling the same functions.
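Running the same function on three or more computers only helps if their outputs are combined sensibly. One common approach for numeric signals is mid-value selection: take the median, so a single faulty channel cannot drag the result, and flag any channel that strays too far from it. The sketch below uses a hypothetical tolerance and is not a description of any particular aircraft's architecture.

```python
import statistics


def mid_value_select(channel_outputs, tolerance):
    """Median of redundant channel outputs, plus indices of channels that disagree with it."""
    selected = statistics.median(channel_outputs)
    suspects = [i for i, value in enumerate(channel_outputs) if abs(value - selected) > tolerance]
    return selected, suspects


# Three flight computers compute the same elevator command; the third has failed.
print(mid_value_select([5.0, 5.1, 42.0], tolerance=0.5))  # (5.1, [2])
```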

One true weakness is computer vision – the automation can’t make out obstacles on the runway nearly as well as humans can. This field is rapidly improving, thanks in part to driverless cars, but it’s not yet ready for prime time.

Fasten your seatbelts

Other challenges will have to be tackled by the entire field. For autonomous planes to fly seamlessly in and out of airports, voice communications would have to be replaced with data links, and every airplane would have to be equipped with a device that broadcasts its location to other aircraft.

“Having an air system of autonomous flight would require basically rebuilding the entire civil aviation infrastructure all over again,” says Patrick Smith.

Atkins holds that the cost is exaggerated, and an industry that represents over a trillion dollars a year in economic activity can support the multi-billion-dollar investment. It should save money and time in the long run.

Still, Sarter and Patrick Smith doubt this justification is enough. To them, it certainly doesn’t serve the public’s interest to give up the people on the plane who know how it flies and who face the same life-and-death stakes as their passengers.

No matter the side of the argument, it always comes back to safety. Perfect safety is impossible, so the question Atkins and Bagian want to answer is this: When is automation good enough?

Would it be enough when automation could save as many lives as pilots do, or would we demand a higher standard?

“It’s just like Dumbo’s feather,” Bagian says. “The mother says, ‘Hold the feather, Dumbo. That will let you fly.’ So is having a pilot Dumbo’s feather?”

Not yet, but the day isn’t far away. And then, what will we choose?

JoAnn Stewart - 1968

Why isn’t the weather information detected by navigational computers, such as true wind direction and speed, temperature, and altitude, transmitted to a ground weather relay system, so that it can be used not only to forecast weather more accurately but also to inform the electronic systems of other aircraft in the area?

JoAnn Stewart - 1968

Why can’t data such as velocity, vertical/horizontal, attitude, g-meter, etc. be recorded within the flight data recorder and POWERED BY BATTERY in case of loss of electronic inputs? This could go to a high-speed cache system and be useful to determine loss of power on the aircraft.

William O. Bank - MD 1967

The evaluation has already been written: “The Glass Cage: Automation and Us” by Nicholas Carr. Automation in the cockpit is supposed to help the pilot, not take over for him.

In about 1967, my brother landed an F-4 on the carrier deck after its tail had been shot off over Kep (I have a picture of it). In the 80’s, as head of the Navy’s Safety Officer Training Program at the Naval Post-Graduate School in Monterey, he landed an F-18 Simulator on the simulated carrier after the instructor had failed all computer systems — the instructor commented that “no one has ever done that in the simulator or in the aircraft” to which my brother replied, “Without that other stuff (the computers), it flies like a real airplane.”

The authors mention the crash of Flight 447, the Airbus A-330 that crashed because “Neither the more experienced co-pilot nor the captain realized until the final seconds that their younger partner had been holding the plane in a stall.” The side-stick on the Airbus is like a video gamer’s stick, something to provide input to the computer that “will take it from there.” Unlike the Boeing aircraft, the pilot’s stick and the co-pilot’s stick are not mechanically connected, so neither knows what the other is saying to the computer. The crash was not caused by pilot error; it was caused by the engineers that started from the preconception that the computer could do it faster and better.

The infamous landing in the Hudson River reflects two fascinating factors. It was not really caused by the bird-strikes (“bird-ingestions”) in both engines. That only started the problem. The COMPUTER in the Airbus realized that if power was maintained, it would damage the engines, SO IT SHUT THEM DOWN. If power had been maintained, they might have landed at Teterboro with damaged (no, destroyed) engines. But the computer shut them down to protect them. The pilot, however, had experience as a glider pilot, something he did for fun. Since the rope that tows a glider into the air breaks on occasion, glider pilots routinely identify where they will land if they suffer a “rope break”, and in powered aircraft, they look at the terrain in the same manner: where will I land if I lose power? The pilot knew that his only option was the Hudson River, and he did a very good job of it!!

The authors need to fly a bit in gliders (“If flying is a language, soaring is its poetry.”). Then they need to read “The Glass Cage.” Then they need to fly a bit more in gliders BEFORE they try to design systems that will replace the pilot (human ?). CAUTION: “The Glass Cage” can be considered to be a discussion of technical things. Actually, it is pure philosophy, something that computer scientists and engineers need to spend more time studying before they are given a PhD …
